Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The tech world is buzzing with Nvidia’s latest breakthrough, and rightly so. The Nvidia Blackwell chip is considered the most significant leap in AI computing power in many years, promising to reshape everything from artificial intelligence development to data center infrastructure.

If you’re wondering whether this matters to you, whether you’re a tech enthusiast, a business owner, or just someone curious about the future, the answer is yes. The Blackwell architecture isn’t just another incremental upgrade; it’s a paradigm shift that could accelerate AI innovation across industries, from healthcare to autonomous vehicles.

This guide looks in depth at what makes the Nvidia Blackwell chip so special, how it compares to its predecessors, and what it means for the future of artificial intelligence. Here we go.

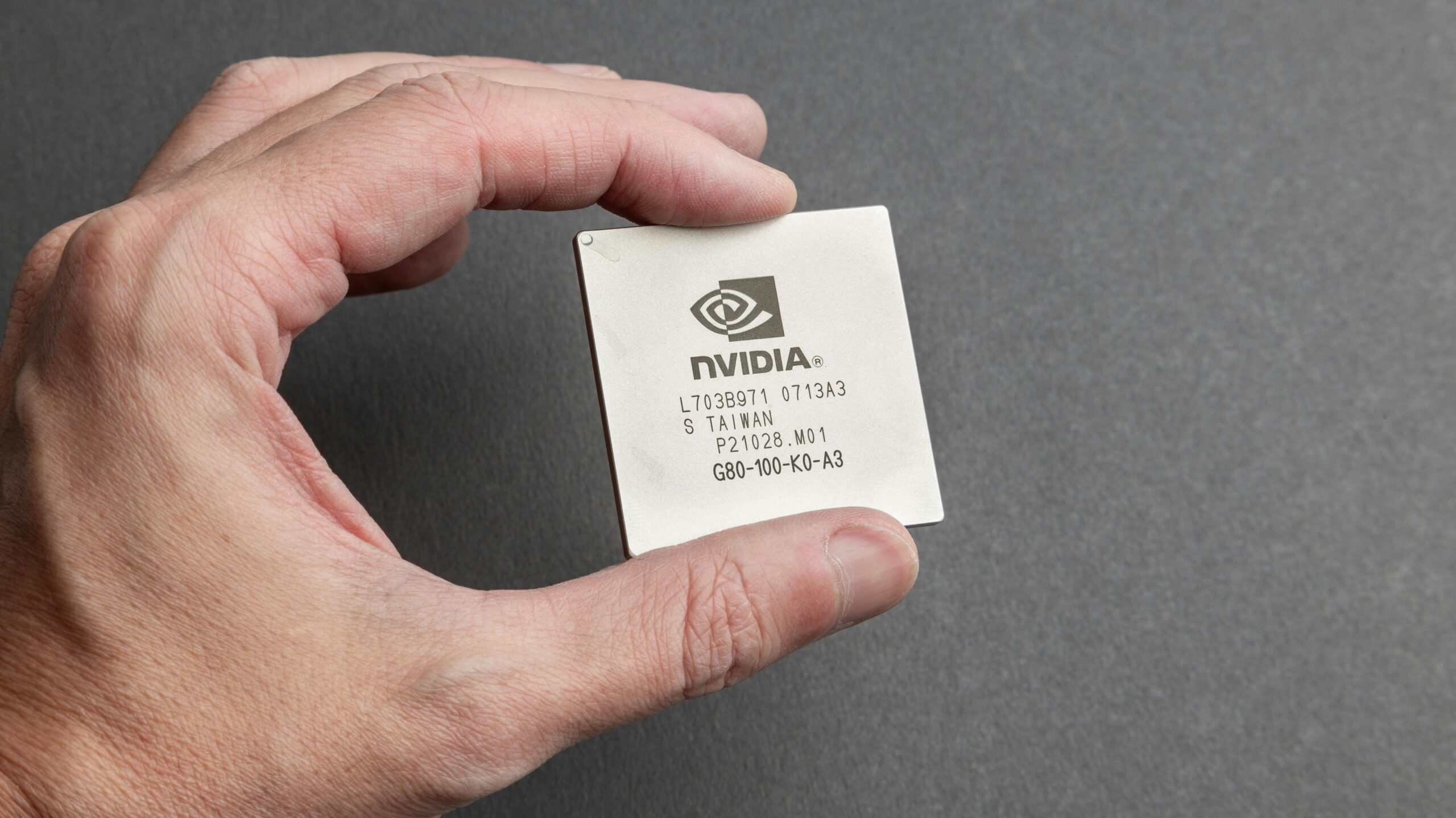

The Nvidia Blackwell chip is the company’s next-generation GPU architecture, designed specifically to handle the massive computational demands of modern AI applications. Named after the mathematician David Blackwell, it is Nvidia’s answer to the exponential growth in AI model complexity.

At its core, Blackwell is built to train and run large language models, generative AI systems, and complex neural networks faster and more efficiently than ever before. Think of it as the engine that powers the AI tools you use daily—but turbocharged.

The Nvidia Blackwell chip architecture introduces several groundbreaking innovations:

What really makes the Nvidia Blackwell chip stand out is its ability to handle trillion-parameter AI models, the kind of massive neural networks that power tools like ChatGPT and Google Gemini. These models require enormous computational resources, and Blackwell is built to deliver them.
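To get a feel for what "trillion-parameter" means in hardware terms, here is a rough back-of-the-envelope sketch. It counts only the storage for the weights themselves and assumes 2, 1, and 0.5 bytes per parameter for FP16, FP8, and FP4. Real deployments also need memory for activations, optimizer state, and caches, so treat the result as a lower bound rather than a sizing guide.

```python
# Rough weight-storage estimate for a model of a given size.
# Assumption: weights only; activations, optimizer state, and caches are ignored.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_terabytes(num_params: float, fmt: str) -> float:
    """Return the storage needed for the weights alone, in TB (1 TB = 1e12 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e12


if __name__ == "__main__":
    one_trillion = 1e12
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt}: {weight_terabytes(one_trillion, fmt):.1f} TB of weights")
```

Even at the narrowest format, a one-trillion-parameter model needs about half a terabyte just for its weights, which is why such models are spread across many GPUs.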

The Nvidia Blackwell chip features Nvidia’s second-generation Transformer Engine, specifically optimized for the transformer architectures that underpin most modern AI models. This isn’t just marketing speak; it’s a fundamental redesign of how GPUs process the attention mechanisms that make AI models “intelligent.”

The Transformer Engine uses mixed-precision computing and automatically chooses the best numerical format for different parts of the calculation. This means faster processing without meaningfully sacrificing accuracy, which is precisely what you need when you’re training models with billions or trillions of parameters.
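The idea behind mixed precision can be illustrated with a toy model. The sketch below is not Nvidia’s algorithm: it simply rounds values to a given number of mantissa bits and picks the narrowest format whose worst-case relative error stays under a tolerance. The function names, the tolerance, and the simplified formats (1, 3, and 10 mantissa bits standing in for FP4, FP8, and FP16, with exponent range ignored) are all assumptions made for illustration.

```python
import math

# Assumed mantissa widths for illustration: FP4 (E2M1), FP8 (E4M3), FP16.
# Exponent range and special values are deliberately ignored in this toy model.
FORMATS = [("FP4", 1), ("FP8", 3), ("FP16", 10)]


def round_to_mantissa_bits(x: float, bits: int) -> float:
    """Round x to `bits` explicit mantissa bits (plus the implicit leading bit)."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)        # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (bits + 1)     # frexp's mantissa includes the leading bit
    return math.ldexp(round(m * scale) / scale, e)


def pick_format(values, tolerance: float) -> str:
    """Hypothetical selector: narrowest format whose worst relative error fits."""
    for name, bits in FORMATS:
        worst = max(
            (abs(round_to_mantissa_bits(v, bits) - v) / abs(v) for v in values if v != 0.0),
            default=0.0,
        )
        if worst <= tolerance:
            return name
    return "FP32"  # fall back to full precision


if __name__ == "__main__":
    print(pick_format([1.0, 2.0, 0.5], 0.01))   # powers of two survive coarse rounding
    print(pick_format([0.3], 0.001))            # needs more mantissa bits
```

Values that tolerate coarse rounding can use a narrow, fast format, while sensitive ones are kept in a wider format. That is the trade-off a mixed-precision engine manages automatically.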

Perhaps the most impressive engineering feat in the Nvidia Blackwell chip is its multi-die design. Instead of trying to cram everything onto a single silicon chip, Nvidia engineered a way to connect two GPU dies so tightly that they function as a single, massive processor.

The advantages of this approach are as follows:

The connection between these dies is so fast, running at 10 terabytes per second, that there’s virtually no performance penalty. It’s like having two supercomputers that think they’re one.

Blackwell introduces the fifth generation of Nvidia’s NVLink interconnect, which lets multiple GPUs talk to each other at incredible speeds. This is key to distributed AI training, where a single model might be spread across dozens or even hundreds of GPUs.

With NVLink 5, Blackwell systems can achieve up to 1.8 terabytes per second of bidirectional bandwidth between GPUs. To put that into perspective, that’s enough bandwidth to transfer the entire contents of a Blu-ray disc in under a tenth of a second.
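The Blu-ray comparison is easy to check with a few lines of arithmetic. This sketch assumes a 50 GB dual-layer disc and also shows the more conservative case where only half of the bidirectional figure is available in one direction.

```python
NVLINK5_BIDIRECTIONAL_BPS = 1.8e12   # 1.8 TB/s, the figure quoted in the text
DUAL_LAYER_BLURAY_BYTES = 50e9       # assumption: a 50 GB dual-layer disc


def transfer_seconds(num_bytes: float, bytes_per_second: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and latency."""
    return num_bytes / bytes_per_second


if __name__ == "__main__":
    full = transfer_seconds(DUAL_LAYER_BLURAY_BYTES, NVLINK5_BIDIRECTIONAL_BPS)
    one_way = transfer_seconds(DUAL_LAYER_BLURAY_BYTES, NVLINK5_BIDIRECTIONAL_BPS / 2)
    print(f"Aggregate bandwidth: {full * 1000:.1f} ms")
    print(f"One direction only:  {one_way * 1000:.1f} ms")
```

Either way the transfer finishes in well under a tenth of a second, so the comparison holds even with the one-direction assumption.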

To understand where the Nvidia Blackwell chip fits in Nvidia’s lineup, it helps to compare it with its predecessor, the Hopper architecture (H100). Hopper was revolutionary in its own right, but Blackwell pushes the envelope further.

| Feature | Hopper (H100) | Blackwell (B100/B200) |
|---|---|---|
| FP4 Performance | Not available | Up to 20 petaFLOPS |
| Training Speed | Baseline | Up to 2.5x faster |
| Inference Speed | Baseline | Up to 5x faster |
| Memory Bandwidth | 3 TB/s | 8 TB/s |
| Power Efficiency | Baseline | 25x better for inference |

These numbers tell a compelling story. The Nvidia Blackwell chip doesn’t just edge out Hopper; it leaps ahead in almost every meaningful metric.
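As a quick illustration of what those "up to" figures could mean in practice, the sketch below applies them to a hypothetical 30-day Hopper training run. The 30-day baseline is an assumption for illustration only, and real-world gains depend heavily on the workload.

```python
# "Up to" figures taken from the comparison table above.
TRAINING_SPEEDUP = 2.5
HOPPER_BANDWIDTH_TBPS = 3.0
BLACKWELL_BANDWIDTH_TBPS = 8.0


def scaled_duration(baseline: float, speedup: float) -> float:
    """Best-case duration after applying a speedup (same units as baseline)."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline / speedup


if __name__ == "__main__":
    baseline_days = 30.0  # hypothetical Hopper training run
    best_case = scaled_duration(baseline_days, TRAINING_SPEEDUP)
    ratio = BLACKWELL_BANDWIDTH_TBPS / HOPPER_BANDWIDTH_TBPS
    print(f"Training: {baseline_days:.0f} days -> {best_case:.0f} days (best case)")
    print(f"Memory bandwidth ratio: {ratio:.2f}x")
```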

These performance gains translate directly into practical benefits:

For companies investing in AI infrastructure, these aren’t mere niceties; they are game-changers that affect the bottom line.

Nvidia isn’t launching just one Blackwell chip: several different variants have been announced, aiming at different uses and price points.

The B100 and B200 represent flagship Blackwell GPUs targeted at data centers and enterprise AI workloads. While the B200 is positioned as the most premium model with peak performance, the B100 strikes more of a balance.

These chips are ideal for:

The GB200 NVL72 takes the Nvidia Blackwell chip to its logical extreme: this system integrates 72 Blackwell GPUs and 36 Grace CPUs (Nvidia’s Arm-based processors) in a single rack-scale unit.

That’s a configuration for the most challenging AI workloads anyone can think of: systems capable of training models with tens of trillions of parameters, well beyond what is practical on existing architectures.

The GB200 delivers:

Nvidia is also collaborating with cloud providers such as AWS, Google Cloud, and Microsoft Azure to make Blackwell accessible via cloud services. That means you won’t have to purchase the hardware upfront; instead, you can rent it by the hour or even by the minute.

This democratization of access is important; it means startups and researchers can access cutting-edge AI infrastructure without massive upfront capital investment.

The power of the Nvidia Blackwell chip is not merely theoretical; it enables applications that were previously impractical.

The most direct use case would be training and deploying large language models. Models like GPT-4, Claude, and Gemini require huge amounts of computational resources, and Blackwell makes this much more efficient.

With the Nvidia Blackwell chip, AI researchers can:

Generative AI tools for images, video, music, and text are increasingly sophisticated. The Nvidia Blackwell chip provides the horsepower needed to run these models at scale.

Imagine being able to create photorealistic videos from text descriptions in real time, or even generate entire virtual worlds for gaming and simulation. These applications demand huge amounts of parallel processing power, which is exactly what Blackwell delivers.

AI is advancing the state of the art in many fields, including protein folding, molecular dynamics simulation, and drug discovery. Such applications typically run complex simulations, which can take several months on traditional hardware.

Blackwell considerably hastens this process:

Self-driving cars, delivery robots, and industrial automation all rely on AI models that need to process sensor data and make decisions in real time. Blackwell’s inference capabilities make these systems more responsive and reliable. Chip efficiency also matters for edge deployment.

You might not put a full B200 in a car, but the architectural improvements trickle down to Nvidia’s edge computing products.

Beyond the technology itself, the Nvidia Blackwell chip has important implications for companies investing in AI infrastructure.

One of the most compelling aspects of Blackwell is its impact on total cost of ownership (TCO). Although the chips themselves are expensive, they can actually lower overall costs by requiring fewer units to accomplish the same work.
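A simple way to reason about this is to add up hardware and energy costs for two fleets that do the same work. Every number in the sketch below is a hypothetical placeholder (unit counts, prices, power draw, electricity rate, and the three-year horizon), chosen only to show the shape of the calculation. Substitute your own quotes and measurements before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class Fleet:
    units: int               # number of GPUs needed for the workload
    unit_price_usd: float    # purchase price per GPU
    unit_power_kw: float     # average power draw per GPU


def total_cost(fleet: Fleet, hours: float, usd_per_kwh: float) -> float:
    """Hardware plus energy cost; ignores cooling, hosting, staff, and networking."""
    hardware = fleet.units * fleet.unit_price_usd
    energy = fleet.units * fleet.unit_power_kw * hours * usd_per_kwh
    return hardware + energy


if __name__ == "__main__":
    hours = 3 * 8760   # hypothetical three-year horizon
    rate = 0.10        # hypothetical electricity price in USD per kWh
    # Hypothetical fleets: 10 older GPUs versus 4 newer ones for the same work.
    older = Fleet(units=10, unit_price_usd=25_000, unit_power_kw=0.7)
    newer = Fleet(units=4, unit_price_usd=35_000, unit_power_kw=1.0)
    print(f"Older fleet: ${total_cost(older, hours, rate):,.0f}")
    print(f"Newer fleet: ${total_cost(newer, hours, rate):,.0f}")
```

The newer fleet costs more per unit but less in total in this made-up scenario, because fewer units are needed. Whether that holds for you depends entirely on the real speedup you see on your workload.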

Consider these factors:

Companies that adopt Blackwell early gain several competitive advantages. They can iterate on AI models faster, deploy more sophisticated features, and operate at lower cost than competitors using older hardware.

Speed is everything in the AI race. Being able to train a model in days, not weeks, may mean the difference between leading your market and playing catch-up.

For organizations planning their roadmap, the Blackwell chip represents a critical decision point: do you invest now, or wait for prices to come down and availability to improve?

It all depends on your particular circumstances:

The Nvidia Blackwell chip began shipping to partners and customers in late 2024, with broader availability rolling out through 2025. Demand, however, is likely to far outstrip supply at first.

Though Nvidia hasn’t published official retail prices, industry analysts estimate that:

These prices might sound steep, but remember you’re paying for bleeding-edge technology that can handle workloads impossible on previous generations.

Blackwell manufacturing got off to a rocky start, which is not unusual for such novel, highly complex technology. Nvidia and its manufacturing partner TSMC have been working to ramp up production.

Key partners getting early access include:

If you are going to buy Blackwell hardware, expect lead times of several months for most of 2025.

Nvidia does not exist in a vacuum. Several competitors are seeking a share of the lucrative AI chip market.

AMD’s Instinct MI300X is its answer to Nvidia’s dominance in AI computing, but early benchmarks suggest Blackwell keeps Nvidia’s performance leadership intact.

AMD’s strengths include the following:

Intel has been aggressively marketing its Gaudi family of AI accelerators, especially for inference workloads. While not competing directly with Blackwell on raw performance, the products are an alternative for more budget-conscious buyers.

Companies like Google (TPUs), Amazon (Trainium and Inferentia), and Microsoft (Maia) are building their own custom AI chips. These chips give their makers an advantage because each is optimized for particular workloads, but they are not generally available for purchase. For Nvidia, that is both a challenge and a validation: it signals that the market places immense value on dedicated AI hardware, but it may also fragment that market.

Nvidia has already begun talking about what’s next. The company has historically followed a roughly two-year cadence for major architecture releases, so Blackwell’s successor could arrive in 2026-2027.

While details are scant, industry observers expect continued emphasis on:

The evolution of AI chips is driven by the evolution of the models themselves. As models become more sophisticated and diverse, hardware needs to adapt.

The key trends shaping the future include:

The Nvidia Blackwell chip represents a milestone on this journey, but by no means the destination.

No technology is perfect, and Blackwell has its challenges.

Efficiency improvements aside, Blackwell systems still consume a great deal of power: a fully loaded GB200 NVL72 rack can draw more than 100 kilowatts and needs sophisticated cooling infrastructure.

This creates practical limitations:

Although Nvidia’s CUDA platform is the industry standard, harnessing Blackwell’s full capability requires updated software. Developers need to optimize their code for the new architecture to see the maximum benefit.

The good news: Nvidia has strong relationships with developers and robust tools in place to ease this transition.

Nvidia Blackwell chip systems are expensive, which is a barrier to entry for smaller organizations and researchers. While cloud access democratizes availability, it does not remove the fundamental expense of cutting-edge AI infrastructure. The result could be further concentration of AI development capabilities among well-funded organizations, a situation that raises some concerns in the broader AI community.

If you are convinced that the Nvidia Blackwell chip is the right choice for your needs, here is where to start.

Academic researchers and developers can get access in the following ways:

If you are a startup or smaller business, cloud access is likely to be your best bet:

Growing energy consumption by the AI industry is a legitimate concern, and the Nvidia Blackwell chip factors into this conversation.

While Blackwell systems use a great deal of power in absolute terms, they’re considerably more efficient per computation compared to previous generations. This means more AI capability per kilowatt-hour.

Nvidia claims Blackwell can achieve up to 25x better energy efficiency for specific inference workloads relative to Hopper. For organizations running AI at scale, this translates to meaningful reductions in carbon footprint.

Nvidia and its partners are pursuing a number of sustainability initiatives:

The conversation around AI and its sustainability is not black and white. While it is true that training large models consumes a lot of energy, AI also makes many solutions to environmental challenges viable.

The launch of the Nvidia Blackwell chip has generated a significant amount of excitement and analysis from industry experts.

Technology analysts generally view Blackwell’s capabilities as impressive while flagging some practical deployment issues. The consensus is that Blackwell maintains Nvidia’s technological leadership but faces increased competition.

Key themes in analyst commentary:

Early customers report impressive results. For example, OpenAI, Meta, and other AI leaders have highlighted how the Nvidia Blackwell chip lets them take on more ambitious projects faster and at a lower cost.

However, there is also recognition that to fully optimize for Blackwell, time and engineering effort are needed. It’s not plug-and-play; workflows and code need to be adapted to maximize the benefits.

The Nvidia Blackwell chip is designed mainly for training and running large artificial intelligence models, such as the large language models behind ChatGPT, as well as generative AI systems, scientific simulations, and data analytics. It’s built to handle the most computationally intensive AI workloads that require massive parallel processing power.

While official pricing depends on the configuration and customer, industry estimates put individual B100/B200 GPUs at $30,000 to $40,000 each. Complete GB200 rack-scale systems can cost several million dollars. Cloud providers will also offer hourly rentals, likely in the range of $3 to $8 per GPU-hour depending on the configuration and service level.
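Those estimates make it possible to sketch a rough rent-versus-buy break-even point. The calculation below uses only the estimated price and rental ranges quoted above, and it deliberately ignores power, cooling, hosting, and staffing costs on the purchase side, so it understates the true break-even for owned hardware.

```python
def break_even_hours(purchase_price_usd: float, hourly_rate_usd: float) -> float:
    """GPU-hours of rental that would cost as much as buying one GPU outright."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return purchase_price_usd / hourly_rate_usd


if __name__ == "__main__":
    # Corners of the estimated ranges: $30,000-$40,000 per GPU, $3-$8 per GPU-hour.
    soonest = break_even_hours(30_000, 8)
    latest = break_even_hours(40_000, 3)
    print(f"Break-even after {soonest:,.0f} to {latest:,.0f} GPU-hours")
    print(f"That is about {soonest / 24:.0f} to {latest / 24:.0f} days of continuous use")
```

With these estimates, renting costs as much as buying somewhere between roughly 3,750 and 13,333 GPU-hours of use. If your workload runs only occasionally, the cloud is likely the cheaper path.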

The Nvidia Blackwell chip started shipping to select partners and customers in late 2024, with broader availability rolling out through 2025. However, high demand means that most customers should expect significant lead times. Cloud providers AWS, Azure, and Google Cloud are offering Blackwell instances from 2025, making the hardware easier to access for organizations not purchasing it directly.

Compared to Hopper, Blackwell shows significant improvements across nearly all metrics: up to 2.5x faster training for large language models, up to 5x faster inference, and up to 25x better energy efficiency for certain workloads. Blackwell also raises memory bandwidth to 8 TB/s, versus 3 TB/s on Hopper, and supports new precision formats not available in Hopper.

Blackwell introduces several architectural innovations tailored for modern AI workloads, including a second-generation Transformer Engine optimized for neural network architectures used in most AI models; multi-die design for larger, more powerful configurations; fifth-generation NVLink for faster GPU-to-GPU communication; and enhanced memory bandwidth for feeding data to processing cores more quickly. Put together, these features make Blackwell significantly more capable at AI than previous generations.

Yes, though probably not by purchasing hardware directly. The most accessible path for smaller organizations is through cloud computing providers. AWS, Microsoft Azure, and Google Cloud will offer Blackwell-based instances that can be rented by the hour, eliminating the need for large upfront capital investment. This pay-as-you-go model makes cutting-edge AI infrastructure accessible to organizations of all sizes.

Power requirements differ depending on the configuration. Individual B100/B200 GPUs have thermal design powers of around 700-1000 watts. A complete GB200 NVL72 rack can draw more than 100 kilowatts, demanding robust electrical infrastructure together with very powerful cooling systems. Any organization planning to deploy Blackwell needs to make sure its data centers can support these power densities, including adequate cooling.

Nvidia’s main competitors include AMD with its Instinct MI300 series, Intel with its Gaudi accelerators, and custom silicon from the large tech companies: Google with its TPUs, Amazon with Trainium and Inferentia, and Microsoft with Maia. While these options offer compelling features and often lower costs, Nvidia retains a significant advantage thanks to its performance and its mature CUDA software ecosystem.

The Nvidia Blackwell chip is a true generational leap in AI computing capability, offering meaningful performance gains, better energy efficiency, and the ability to address AI challenges that were utterly impractical with previous generations of hardware.

For organizations at the leading edge of AI development, whether that means training state-of-the-art language models, conducting groundbreaking scientific research, or building the next generation of AI products, Blackwell offers advantages that justify the investment. Its raw performance, improved efficiency, and architectural innovations position it as the tool of choice for demanding AI workloads.

But not everyone needs Blackwell. If your AI needs are modest, or if you invested recently in Hopper-generation hardware, it probably makes more sense to wait. Over time, the technology will become more available, perhaps at lower cost, and software ecosystems will mature.

The bigger picture is unmistakable: Blackwell is accelerating the pace of AI innovation. It’s enabling researchers and companies to pursue more ambitious projects, iterate faster, and deploy more capable AI systems. Whether you adopt Blackwell immediately or watch from the sidelines, its impact on the AI landscape will be profound.

If you’re serious about using Blackwell’s capabilities, begin by assessing your current and future AI infrastructure needs. Contact Nvidia or one of its authorized partners to discuss your requirements, or try a cloud provider’s offerings if you want to experiment with Blackwell-based systems without making a capital commitment.

The AI revolution is accelerating, and Blackwell is the engine powering the next phase. The question isn’t whether this technology matters, but whether you’ll be among the organizations shaping the future with it.
